Decoding Civilization: The Role of Code in Human Progress

In the modern world, code is everywhere—from the apps on our smartphones to the systems that manage our daily lives. But the story of code goes beyond the present. It’s a tale intertwined with the evolution of human civilization itself. This article explores how coding has shaped human progress, from early innovations to contemporary advancements, and why understanding its role is crucial for appreciating our technological heritage.

The Dawn of Code: Early Innovations

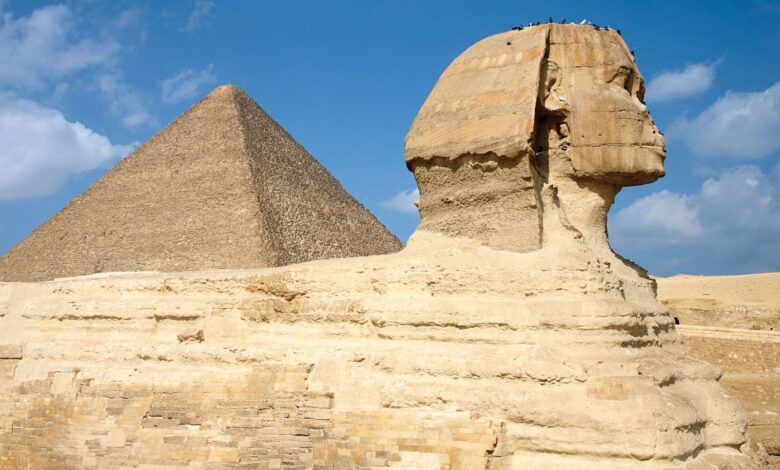

Ancient Foundations

According to this blog post, long before computers and modern programming languages existed, people were already encoding information in systematic ways. One of the earliest known examples is cuneiform script, which emerged in Mesopotamia before 3000 BCE and was later used by the Babylonians. Although not code in the modern sense, it was a system for recording information that laid the groundwork for more complex systems.

Similarly, the use of the abacus in ancient China and Greece demonstrated early attempts at mechanizing calculations. These tools represented an early form of coding, where symbols and positions represented numerical values, setting the stage for more sophisticated programming systems.
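To make the idea of positions carrying numerical value concrete, here is a minimal Python sketch. It assumes a simplified abacus in which each rod holds a single digit and the rods are listed from most significant to least significant; the function name `abacus_value` is invented for this illustration and does not model any particular historical device.

```python
def abacus_value(beads_per_rod, base=10):
    """Read a number from a simplified abacus.

    beads_per_rod lists the digit shown on each rod, most significant
    rod first (an assumption made for this illustration).
    """
    total = 0
    for count in beads_per_rod:
        if not 0 <= count < base:
            raise ValueError(f"a rod cannot show {count} in base {base}")
        # Moving one rod to the right multiplies everything so far by the base.
        total = total * base + count
    return total


print(abacus_value([4, 0, 7]))          # 407
print(abacus_value([1, 0, 1], base=2))  # 5
```

The same digit contributes a different amount depending on which rod it sits on, which is the positional principle the paragraph above describes.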

The Birth of Modern Computing

The 19th century brought significant advances in mechanical calculation. Charles Babbage’s Analytical Engine, conceived in the 1830s but never completed, is often considered the first design for a general-purpose mechanical computer. Ada Lovelace, working with Babbage, published in 1843 what is widely regarded as the first algorithm intended for this machine, making her one of the world’s first programmers. Her work highlighted the potential of coding as a means to execute complex calculations and paved the way for future innovations.

The 20th Century: The Rise of Digital Code

The Advent of Electronic Computers

The 20th century marked a transformative era in coding with the advent of electronic computers. Alan Turing, a mathematician and logician, developed the concept of the Turing machine, a theoretical construct that underpins the modern understanding of computation. Turing’s ideas influenced the development of early computers and programming languages, establishing a foundation for contemporary coding practices.
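A Turing machine can be sketched in a few lines of Python. The example below is an illustration rather than Turing's own formulation: the rule table, the state names `carry` and `halt`, and the choice to start the head on the rightmost cell are all assumptions made here. This particular machine adds one to a binary number written on the tape.

```python
BLANK = "_"

# (current state, symbol read) -> (symbol to write, head movement, next state)
# A movement of -1 means one cell left; 0 (stay put) is a convenience often
# added to the textbook left/right-only definition.
INCREMENT_RULES = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + 1 = 0, carry moves left
    ("carry", "0"): ("1", 0, "halt"),    # absorb the carry and stop
    ("carry", BLANK): ("1", 0, "halt"),  # ran off the left edge: new digit
}


def run_turing_machine(rules, tape_text, start_state="carry", max_steps=1000):
    """Run the rule table on tape_text and return the final tape contents."""
    tape = dict(enumerate(tape_text))  # sparse tape: position -> symbol
    head = len(tape_text) - 1          # assumption: start on the rightmost cell
    state = start_state
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = tape.get(head, BLANK)
        write, move, state = rules[(state, symbol)]
        tape[head] = write
        head += move
        steps += 1
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, BLANK) for i in cells)


print(run_turing_machine(INCREMENT_RULES, "1011"))  # 1100
print(run_turing_machine(INCREMENT_RULES, "111"))   # 1000
```

Everything the machine does comes from looking up one rule at a time, which is why such a simple construct can serve as a general model of computation.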

The late 1950s saw the emergence of high-level programming languages like Fortran and COBOL, designed to simplify coding for scientific and business applications respectively. These languages represented a significant leap from machine code and assembly language, allowing programmers to write complex programs in a more readable, less machine-specific form.

The Personal Computer Revolution

The 1970s and 1980s brought the personal computer revolution, with companies like Apple and Microsoft making computing accessible to the general public. The introduction of user-friendly operating systems and programming languages, such as BASIC and later C++, democratized coding and opened new possibilities for software development.

This era also saw the Internet take shape, revolutionizing how we share information and communicate. By the early 1990s, the creation of HTML and the first web browsers had made it possible to build and navigate the World Wide Web, forever changing the landscape of information and connectivity.

The 21st Century: Code in Everyday Life

Ubiquity of Code

In the 21st century, code has become integral to virtually every aspect of daily life. From mobile apps and social media platforms to the sophisticated algorithms driving artificial intelligence, coding powers the technologies we rely on daily. The proliferation of smartphones and the Internet of Things (IoT) has further embedded code into our lives, enabling smart devices and interconnected systems.

Innovations and Challenges

Recent years have seen rapid advancements in coding and technology, with innovations such as machine learning, blockchain, and quantum computing. These technologies promise to reshape industries and create new opportunities, but they also pose challenges related to privacy, security, and ethics.

For instance, machine learning algorithms can analyze vast amounts of data to make predictions and decisions, but they also raise concerns about bias and transparency. Blockchain technology offers the potential for secure transactions and decentralized systems but requires careful management and regulation. Quantum computing, while still in its early stages, has the potential to revolutionize problem-solving capabilities but also presents new security challenges.

The Human Element: Code and Society

Empowering Individuals

Coding has empowered individuals to create and innovate in ways previously unimaginable. Platforms like GitHub allow programmers to collaborate on projects and share code, fostering a global community of developers. Open-source software has enabled people to contribute to and benefit from a shared pool of resources, driving innovation and reducing barriers to entry in technology.

Education and Inclusivity

Education plays a crucial role in fostering a new generation of coders and technologists. Initiatives like Code and various coding boot camps aim to make programming more accessible to people of all ages and backgrounds. By providing resources and support for learning to code, these programs help bridge the digital divide and promote inclusivity in the tech industry.

Conclusion

The role of code in human progress is both profound and multifaceted. From ancient record-keeping systems to modern artificial intelligence, coding has driven technological advancement and societal change. As we look to the future, understanding the historical context and ongoing impact of coding helps us appreciate its significance and potential.

By recognizing the contributions of early innovators and embracing the opportunities presented by contemporary technologies, we can continue to harness the power of code to shape a better, more connected world. As coding becomes increasingly embedded in our lives, its role in driving progress and innovation will only grow.